Flash Parking: Consumer Sentiment Analysis

Flash Parking · Industry-Sponsored · University of Michigan · 2023

This project, developed in collaboration with FLASH Parking and the University of Michigan MDP program, aims to build an automated system to measure customer satisfaction from kiosk interactions using multimodal visual and audio data.

Note: Unlike traditional academic research, this industry-sponsored project focused on creating a practical, deployable solution for FLASH Parking’s real-world needs. Our goal was not to chase state-of-the-art benchmarks but to design a system that works reliably within real operational constraints, from limited onboard computing power to deployment scalability. The experience taught us how to balance technical innovation with practicality and turn research ideas into something that can actually run in the field.

Faculty Mentor: Dr. Kayvan Najarian

Sponsor Mentors: Hunter Dunbar, Edward Hunter

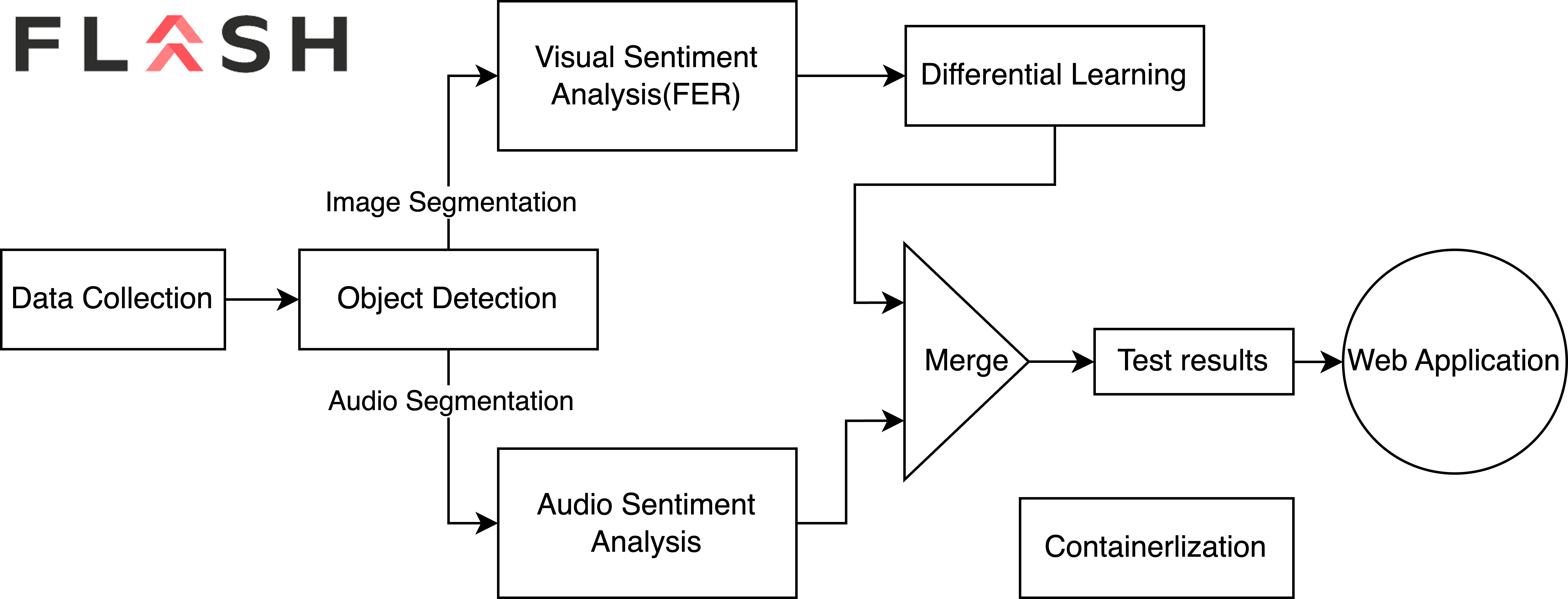

System Architecture

The system integrates three core components working in parallel:

- Object Detection – Detects human faces and extracts relevant audio from kiosk video feeds

- Visual Sentiment Analysis – Classifies facial expressions and emotional states

- Audio Sentiment Analysis – Analyzes emotional tone in speech

System Output: A binary sentiment label (Positive/Negative) and a multimodal confidence score in the range [0, 1]
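The write-up does not say how the visual and audio outputs are merged into one label and score. As a minimal sketch only, the following assumes a weighted late fusion of two positive-class probabilities. The function name `fuse_scores`, the `visual_weight` parameter, the 0.5 threshold, and the confidence formula are all hypothetical choices made for illustration, not the project's actual rule.

```python
from dataclasses import dataclass


@dataclass
class SentimentResult:
    label: str         # "Positive" or "Negative"
    confidence: float  # 0 = undecided, 1 = fully certain


def fuse_scores(visual_pos: float, audio_pos: float,
                visual_weight: float = 0.5) -> SentimentResult:
    """Combine two positive-class probabilities by weighted averaging.

    Assumption: each modality reports P(positive) in [0, 1]. The weight
    and the decision threshold are illustrative defaults.
    """
    for name, value in (("visual_pos", visual_pos),
                        ("audio_pos", audio_pos),
                        ("visual_weight", visual_weight)):
        if not 0.0 <= value <= 1.0:
            raise ValueError(f"{name} must be in [0, 1], got {value}")

    fused = visual_weight * visual_pos + (1.0 - visual_weight) * audio_pos
    label = "Positive" if fused >= 0.5 else "Negative"
    # Distance from the decision boundary, rescaled to [0, 1].
    confidence = abs(2.0 * fused - 1.0)
    return SentimentResult(label=label, confidence=confidence)


if __name__ == "__main__":
    print(fuse_scores(0.9, 0.7))
```

Under this assumed rule, two modalities that disagree strongly pull the fused probability toward 0.5, so the confidence drops toward 0 instead of favoring either one.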

Methodology

Object Detection Module

Identifies customer faces in kiosk video feeds and triggers synchronized audio recording. The detector is built on YOLOv8 and trained on a custom dataset of 3,500+ annotated images, with data augmentation (rotation, shear, and grayscale conversion) to improve robustness to pose and lighting. The model achieved 96.3% mAP with 91.2% precision and 91.8% recall. It produces cropped face images and audio files for downstream analysis.
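The step from a detected bounding box to a cropped face image can be sketched as follows. This is a hedged illustration in plain Python, not the project's code. The helper names `expand_and_clamp` and `crop`, the `(x1, y1, x2, y2)` pixel box format, and the 10% padding are assumptions, and the image is modeled as a nested list of pixel rows so the sketch stays dependency-free.

```python
import math
from typing import List, Sequence, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2) in pixels


def expand_and_clamp(box: Box, img_w: int, img_h: int,
                     pad_frac: float = 0.1) -> Tuple[int, int, int, int]:
    """Pad a detection box by a fraction of its size, then clip to the image."""
    x1, y1, x2, y2 = box
    if x2 <= x1 or y2 <= y1:
        raise ValueError("box must have positive width and height")
    pad_x = (x2 - x1) * pad_frac
    pad_y = (y2 - y1) * pad_frac
    left = max(0, math.floor(x1 - pad_x))
    top = max(0, math.floor(y1 - pad_y))
    right = min(img_w, math.ceil(x2 + pad_x))
    bottom = min(img_h, math.ceil(y2 + pad_y))
    return left, top, right, bottom


def crop(image: Sequence[Sequence[int]],
         region: Tuple[int, int, int, int]) -> List[List[int]]:
    """Return the rows and columns of `image` inside `region`."""
    left, top, right, bottom = region
    return [list(row[left:right]) for row in image[top:bottom]]


if __name__ == "__main__":
    region = expand_and_clamp((100, 50, 200, 150), img_w=640, img_h=480)
    print(region)
```

A little padding around the box keeps the chin and forehead in frame, which tends to help an expression classifier. Clipping keeps the crop valid when a face sits at the edge of the camera view.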

Visual Sentiment Analysis

Classifies facial expressions using a Vision Transformer (ViT) pre-trained on ImageNet-21K and fine-tuned on the FER+ dataset (28,709 training images). The model significantly outperformed the legacy CNN approach, achieving an AUC of 0.937 compared to 0.76 for the CNN. A key innovation is differential learning, which fits a regression line to per-frame emotion scores and uses its slope to capture emotional trends across video frames. This enables dynamic sentiment weighting based on temporal patterns rather than isolated snapshots.
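The regression slope idea can be illustrated with an ordinary least-squares fit over frame indices. The sketch below assumes one positive-sentiment score per frame in [0, 1]. The function names, the `gain` parameter, and the way the slope shifts the mean score are hypothetical and only show one possible form of trend-based weighting.

```python
from typing import Sequence


def trend_slope(scores: Sequence[float]) -> float:
    """Least-squares slope of score versus frame index (0, 1, 2, ...)."""
    n = len(scores)
    if n == 0:
        raise ValueError("scores must not be empty")
    if n == 1:
        return 0.0  # a single frame carries no trend
    t_mean = (n - 1) / 2.0
    s_mean = sum(scores) / n
    num = sum((t - t_mean) * (s - s_mean) for t, s in enumerate(scores))
    den = sum((t - t_mean) ** 2 for t in range(n))
    return num / den


def trend_adjusted_score(scores: Sequence[float], gain: float = 0.5) -> float:
    """Shift the mean score by the fitted change over the window.

    Assumption: `gain` controls how strongly the trend counts. The
    result is clipped to [0, 1].
    """
    n = len(scores)
    mean = sum(scores) / n
    projected_change = trend_slope(scores) * (n - 1)
    return min(1.0, max(0.0, mean + gain * projected_change))


if __name__ == "__main__":
    improving = [0.2, 0.4, 0.6, 0.8]
    print(trend_slope(improving), trend_adjusted_score(improving))
```

With this rule, a customer whose expression improves over the interaction scores higher than one with the same average but a declining trend.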

Audio Sentiment Analysis

Analyzes emotional tone in customer speech using a BiLSTM with an attention mechanism. The system first converts audio to text using speech recognition (95.1% accuracy). The BiLSTM encoder then captures bidirectional sentence context, while the attention layer emphasizes emotionally significant words. The model was trained on the dair-ai/emotion dataset with its six emotions reclassified to binary labels (positive: joy, love, surprise; negative: anger, fear, sadness) and achieved 89.2% accuracy and 0.94 AUROC.
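Two pieces of this pipeline are easy to show in isolation: the binary relabeling and the attention-weighted pooling of encoder states. The sketch below is plain Python and rests on assumptions. The emotion grouping follows the text above, but the function names, the use of 1 for positive and 0 for negative, and the simple softmax-weighted sum (standing in for a learned attention layer over BiLSTM outputs) are illustrative only.

```python
import math
from typing import List, Sequence

POSITIVE = {"joy", "love", "surprise"}
NEGATIVE = {"anger", "fear", "sadness"}


def to_binary(emotion: str) -> int:
    """Map an emotion name to 1 (positive) or 0 (negative)."""
    name = emotion.strip().lower()
    if name in POSITIVE:
        return 1
    if name in NEGATIVE:
        return 0
    raise ValueError(f"unknown emotion: {emotion!r}")


def softmax(scores: Sequence[float]) -> List[float]:
    """Numerically stable softmax."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention_pool(hidden_states: Sequence[Sequence[float]],
                   scores: Sequence[float]) -> List[float]:
    """Weighted sum of per-token states, with weights = softmax(scores).

    In a trained model the scores would come from a learned layer;
    here they are passed in directly.
    """
    if not scores or len(hidden_states) != len(scores):
        raise ValueError("need one score per hidden state")
    weights = softmax(scores)
    dim = len(hidden_states[0])
    return [sum(w * h[i] for w, h in zip(weights, hidden_states))
            for i in range(dim)]


if __name__ == "__main__":
    print(to_binary("joy"), to_binary("fear"))
    print(attention_pool([[1.0, 0.0], [0.0, 1.0]], [2.0, 0.0]))
```

A token with a higher attention score contributes more to the pooled sentence vector, which is how emotionally loaded words can dominate the final classification.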

Results & Impact

The integrated multimodal system delivers reliable real-time customer satisfaction scores by combining face detection, visual sentiment analysis, and audio emotion recognition. It processes live video feeds with a face detection overlay, tracks emotional trends over time, and outputs continuous sentiment scores with confidence metrics. The containerized deployment package is ready for integration with FLASH Parking kiosk infrastructure.