Luyang Hu (胡路杨)

huluyang@seas.upenn.edu / hulu@umich.edu

Philadelphia, PA 19104

I’m a second-year Robotics M.S.E. student in the GRASP Lab at the University of Pennsylvania, advised by Prof. Antonio Loquercio and Prof. Dinesh Jayaraman.

I earned my bachelor’s degree in Computer Science, Data Science, and Linguistics from the University of Michigan, where I worked with Prof. Joyce Chai on how semantic knowledge can guide robot planning and policy learning.

At Penn, my research focuses on scalable and physics-grounded robot learning for manipulation and humanoid control. I build systems that leverage foundation models, large-scale simulation, and human motion capture to teach robots generalizable behaviors across sensing modalities and embodiments. Looking ahead, I’m particularly interested in how robots can move beyond passive data scaling by learning efficiently through predictive world models and information-aware representations that enable adaptive, resource-efficient embodied intelligence.

My broader interests lie in embodied intelligence, robot learning, and data-driven methods that connect human and robotic understanding. As I approach the end of my master’s training, I’m eager to continue pursuing research and am actively seeking PhD opportunities.

Outside the lab, it’s sim2real for me: ⛰️ trails, 🎾 courts, and 🎞️ 35 mm frames.

Link to my CV (last update: Nov 2025).

News

| Jan 15, 2025 | Our paper RoSHI: A Versatile Robot-oriented Suit for Human Data In-the-Wild has been submitted to ICRA 2026! |
|---|---|
| Aug 27, 2024 | Joined the GRASP Lab at the University of Pennsylvania |
| May 08, 2024 | Graduated from the University of Michigan. Forever go blue |

Selected Publications

-

RoSHI: A Versatile Robot-oriented Suit for Human Data In-the-Wild

ICRA 2026 (under review), 2025

We present RoSHI, a low-cost wearable that fuses 9 IMUs with Aria glasses to capture full-body motion, articulated hands, and egocentric video. Our design emphasizes long-horizon stability and occlusion robustness for humanoid learning. We introduce a simulation-in-the-loop retargeting framework that converts human data into physically feasible robot actions. Of the captured sequences, 61.9% were successfully deployed on a Unitree G1 humanoid, providing a scalable foundation for human-to-humanoid imitation learning.

-

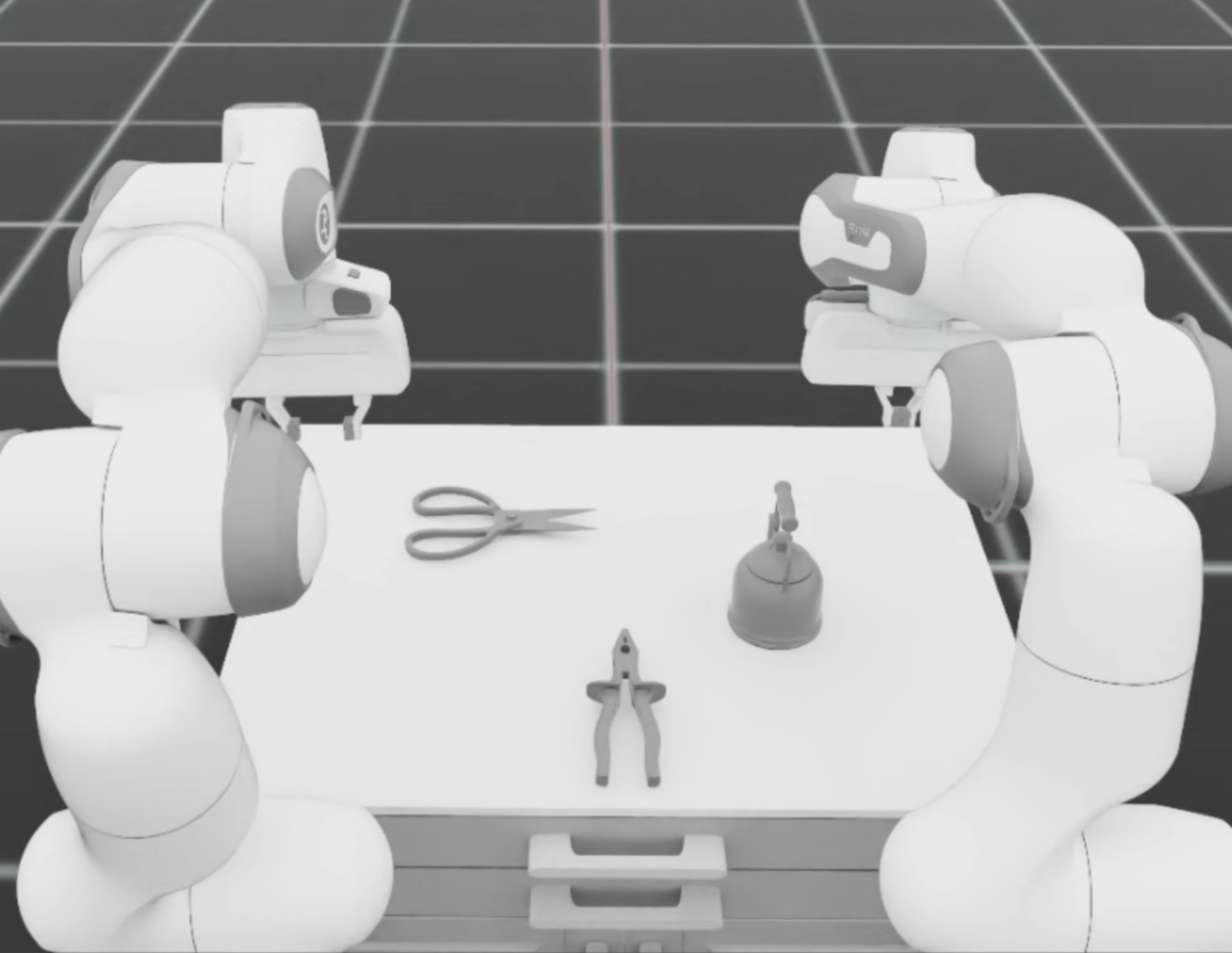

EUREKAWORLD: Scalable Real-World Manipulation via LLM-Automated RL

RSS 2026 (expected submission), 2025

We present Eureka for Manipulation, a large-scale RL framework that integrates LLMs to automate environment setup, reward shaping, and curriculum design for dexterous manipulation. Leveraging multi-GPU compute, our system couples LLM-guided simulation construction with massive RL optimization to generate diverse digital twins and achieve zero-shot sim-to-real transfer. We demonstrate robust transfer across manipulation tasks from single-arm tool use to bimanual coordination, and propose a paradigm for scalable reproducibility.

-

Flash Parking: Consumer Sentiment Analysis
